Suppose you’re a stock market analyst who wants to keep a finger on the pulse of financial news. How would you gather that information? With web scraping. Using Python, you can automate the extraction of headlines and insights from multiple news websites, helping you make more informed decisions in near real time.

The ability to extract valuable data from websites has become an invaluable skill. Whether you’re a data enthusiast, a researcher, or a business professional seeking to gain a competitive edge, web scraping with Python opens up a world of opportunities. With its user-friendly libraries and a well-crafted strategy, you can extract data from virtually any website. This Python Web Scraper Guide is your gateway to this dynamic world of data acquisition.

Why is Web Scraping Used?

Web scraping is used for many purposes across various industries. Its appeal lies in its ability to gather large volumes of diverse, up-to-date data from the internet. This data serves as a resource for insights, enabling businesses and individuals to make informed decisions, track trends, and gain a competitive edge.

For businesses, web scraping offers a means to monitor competitors, analyze market dynamics, and gather customer feedback. It can be a game-changer for e-commerce, where it helps retailers adjust pricing strategies in response to market fluctuations.

Researchers and data enthusiasts rely on web scraping to collect information for academic studies, trend analysis, or simply to satisfy their curiosity. It’s also a valuable tool for journalists seeking to uncover stories and for professionals aiming to stay ahead in their fields.

The Legality of Web Scraping

The legality of web scraping lies in a delicate balance between technology, data ownership, and ethics. While web scraping, when used responsibly, can be a powerful tool for data acquisition, it can also cross legal boundaries.

Courts have often examined the nature and intent behind web scraping activities. Generally, scraping public data for personal use or research is less likely to raise legal issues. However, scraping sensitive or copyrighted content, violating website terms of service, or causing harm can lead to legal challenges.

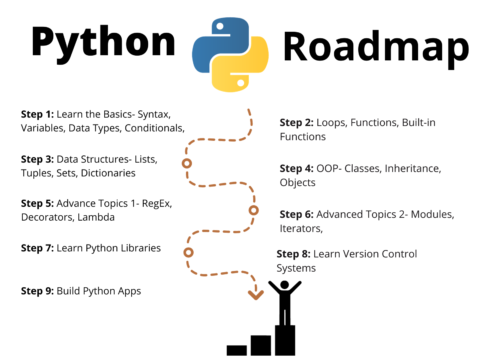

Why Python is Good for Web Scraping

The popularity of Python in web scraping can be attributed to its versatility and rich ecosystem of libraries. It has a simple and readable syntax that streamlines the process of making HTTP requests, parsing HTML, and extracting data.

Python’s libraries, including Requests and BeautifulSoup, provide user-friendly tools for these tasks. Furthermore, Python’s extensive community support and continuous development ensure that it remains a top choice for web scraping. Its adaptability and effectiveness in handling diverse web scraping scenarios make it the preferred language for both beginners and experienced web scrapers.

Getting Started with Python Web Scraping

Web scraping is the process of extracting data from websites. Python has a rich ecosystem of libraries, which makes this task remarkably accessible.

Here’s how to scrape headlines from a website with Python:

    import requests
    from bs4 import BeautifulSoup

    # Send an HTTP GET request to the news website
    url = 'https://example-news-website.com'
    response = requests.get(url)

    # Parse the HTML content
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract headlines by finding relevant elements (e.g., h2 tags)
    headlines = soup.find_all('h2')

    # Print the extracted headlines
    for headline in headlines:
        print(headline.text)

Choose the Right Tools

Python has several libraries that are indispensable for web scraping, and you’ll want to start by installing them. The key libraries include:

- Requests: Used to make HTTP requests to the website and retrieve its HTML content.

- BeautifulSoup: Helps parse the HTML and extract the data you need.

You can install these libraries using pip with commands like pip install requests and pip install beautifulsoup4.

Inspect the Website

Before you begin scraping, open your target website in a web browser. You’ll need to inspect the website’s HTML structure to identify the data you want to extract. Modern browsers come with built-in developer tools that make this process straightforward.

For instance, here is how you might inspect a news website:

- Begin by visiting the website you want to scrape using your web browser.

- On the webpage, right-click on an element you’re interested in scraping, such as a headline, and select “Inspect” from the context menu. This will open the browser’s developer tools.

- In the developer tools, you’ll see the HTML structure of the webpage. It’s organized in a hierarchical tree structure with different HTML elements. Your goal is to locate the specific HTML tags and classes that contain the data you want to scrape.

- Carefully examine the HTML structure to identify the elements that hold the data you need. In the case of news headlines, you might find that headlines are often contained within <h2> or other header tags.

- Make a note of any specific attributes or classes associated with the elements that contain the data. These attributes will be essential for selecting the right elements in your web scraping code.
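
The tags and classes you note during inspection translate directly into selectors in your code. Here is a minimal sketch of that step. It parses an inline HTML snippet rather than a live page, and the `headline` and `sidebar-title` classes and the sample markup are invented for illustration; substitute whatever you actually found in the developer tools.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for what the developer tools might show.
SAMPLE_HTML = """
<article>
  <h2 class="headline"><a href="/markets/rates">Central bank holds rates</a></h2>
  <h2 class="headline"><a href="/tech/earnings">Chipmaker beats forecasts</a></h2>
  <h2 class="sidebar-title">Most read</h2>
</article>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Select by tag name and class, mirroring the notes taken during inspection.
by_class = soup.find_all("h2", class_="headline")

# The same selection written as a CSS selector.
by_selector = soup.select("h2.headline")

# Pull out the visible text of each matching element.
headline_texts = [tag.get_text(strip=True) for tag in by_class]
print(headline_texts)
```

Both calls skip the `sidebar-title` heading, which is why noting the class (and not just the tag) matters.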

Make HTTP Requests

Using the Requests library, send an HTTP GET request to the website’s URL. This will fetch the HTML content of the webpage and store it in a variable for further processing.

    import requests

    # Send an HTTP GET request to the website
    url = 'https://example.com'
    response = requests.get(url)

    # Store the website's content in a variable
    html_content = response.text

Parse HTML

With the HTML content in hand, you can now use BeautifulSoup to parse the HTML and navigate through its elements. You can find specific elements by tag, class, or ID, making it easy to extract the desired data.

    from bs4 import BeautifulSoup

    # Parse the HTML content
    soup = BeautifulSoup(html_content, 'html.parser')

    # Extract data by finding elements
    # (find() returns None when nothing matches, so check the result in real code)
    data = soup.find('div', class_='some-class').text

Data Extraction

The data you want might be within tags like <p>, <h2>, <a>, or any other HTML element. Use the appropriate methods to extract text, links, or other information.

    # Extract headlines by finding relevant elements (e.g., h2 tags)
    headlines = soup.find_all('h2')
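
To make the "text, links, or other information" part concrete, here is a small sketch that pulls headings, paragraph text, and absolute link URLs from one piece of HTML. The sample markup, the `extract_items` helper, and the `BASE_URL` placeholder are assumptions made for this example, not part of any particular site.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://example.com"  # placeholder used to resolve relative links

# Hypothetical markup for illustration only.
SAMPLE_HTML = """
<div>
  <h2>Markets rally</h2>
  <p>Stocks closed <b>higher</b> on Tuesday.</p>
  <a href="/news/markets">Read more</a>
  <a href="https://example.org/about">About</a>
  <a>No link here</a>
</div>
"""


def extract_items(html, base_url):
    """Return headings, paragraph text, and absolute link URLs found in html."""
    soup = BeautifulSoup(html, "html.parser")
    headings = [h.get_text(strip=True) for h in soup.find_all("h2")]
    # A separator keeps words apart when text is split across nested tags.
    paragraphs = [p.get_text(" ", strip=True) for p in soup.find_all("p")]
    # href=True skips <a> tags that have no href attribute.
    links = [urljoin(base_url, a["href"]) for a in soup.find_all("a", href=True)]
    return {"headings": headings, "paragraphs": paragraphs, "links": links}


result = extract_items(SAMPLE_HTML, BASE_URL)
print(result)
```

Resolving links with `urljoin` is a design choice: many pages use relative URLs, and storing absolute ones makes the data usable later without knowing which page it came from.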

Data Cleaning

Depending on the website’s structure, the extracted data might need some cleaning. You can remove unwanted characters, whitespace, or format it as needed.
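
What cleaning looks like depends on the site, but a typical pass might decode HTML entities, normalize unusual characters, collapse whitespace, and drop empty or duplicate entries. The sketch below uses only the standard library; `clean_text`, `clean_all`, and the sample strings are hypothetical names and data chosen for this example.

```python
import html
import re
import unicodedata


def clean_text(raw):
    """Normalize one scraped string."""
    text = html.unescape(raw)                   # "&amp;" becomes "&"
    text = unicodedata.normalize("NFKC", text)  # e.g. non-breaking space becomes a plain space
    text = re.sub(r"\s+", " ", text)            # collapse runs of whitespace and newlines
    return text.strip()


def clean_all(items):
    """Clean every string, dropping blanks and duplicates while keeping order."""
    seen = set()
    cleaned = []
    for item in items:
        text = clean_text(item)
        if text and text not in seen:
            seen.add(text)
            cleaned.append(text)
    return cleaned


raw_headlines = [
    "  Markets\n rally &amp; bonds slip ",
    "Markets rally & bonds slip",
    "\t",
    "Oil\u00a0prices steady",
]
print(clean_all(raw_headlines))
# ['Markets rally & bonds slip', 'Oil prices steady']
```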

Iterate and Paginate

If the data is spread across multiple pages, set up a loop to scrape multiple pages by modifying the URL or using pagination links.
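
One way to structure such a loop is sketched below, assuming the site exposes the page number in the URL (for example `?page=2`). The `scrape_pages` function, the URL template, and the stand-in `fake_fetch` are all hypothetical; in real use, `fetch_page` would request the URL with Requests and parse the result with BeautifulSoup.

```python
import time


def scrape_pages(fetch_page, url_template, max_pages=5, delay_seconds=1.0):
    """Collect items page by page until a page comes back empty or max_pages is reached."""
    all_items = []
    for page in range(1, max_pages + 1):
        url = url_template.format(page=page)
        items = fetch_page(url)
        if not items:
            break  # an empty page is treated as the end of the results
        all_items.extend(items)
        time.sleep(delay_seconds)  # pause between requests to go easy on the server
    return all_items


# Stand-in for a real site so the sketch runs without network access.
FAKE_SITE = {
    "https://example.com/news?page=1": ["Headline A", "Headline B"],
    "https://example.com/news?page=2": ["Headline C"],
}


def fake_fetch(url):
    return FAKE_SITE.get(url, [])


results = scrape_pages(fake_fetch, "https://example.com/news?page={page}", delay_seconds=0)
print(results)
# ['Headline A', 'Headline B', 'Headline C']
```

Sites that paginate with "next" links instead of page numbers need a different loop: follow the link found on each page until there is none.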

Store Data

After extracting the data, you can save it to a file, such as CSV, JSON, or even a database, for further analysis or use.
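
As a rough illustration, the standard library's `csv` and `json` modules are enough for the file-based options. The field names (`headline`, `url`), the sample rows, and the helper names below are assumptions for this sketch, and the files are written to a temporary directory so the example leaves nothing behind in your project.

```python
import csv
import json
import tempfile
from pathlib import Path


def save_csv(rows, path):
    """Write a list of dicts to a CSV file with a header row."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["headline", "url"])
        writer.writeheader()
        writer.writerows(rows)


def save_json(rows, path):
    """Write a list of dicts to a JSON file, keeping non-ASCII text readable."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(rows, f, ensure_ascii=False, indent=2)


# Hypothetical scraped data.
rows = [
    {"headline": "Markets rally", "url": "https://example.com/news/markets"},
    {"headline": "Oil prices steady", "url": "https://example.com/news/oil"},
]

output_dir = Path(tempfile.mkdtemp())
save_csv(rows, output_dir / "headlines.csv")
save_json(rows, output_dir / "headlines.json")
print(sorted(p.name for p in output_dir.iterdir()))
```

CSV suits flat, tabular data you plan to open in a spreadsheet; JSON is the better fit when records are nested or vary in shape.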

Handle Errors and Rate Limiting

Implement error handling to address issues like failed requests. Also, consider adding delays between requests to avoid overwhelming the website’s server, which can result in being blocked.
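
A simple version of both ideas might look like the sketch below: retry a failed request a few times and wait longer after each failure. The function name, the retry count, and the delay values are illustrative assumptions, and the `get` parameter is a hypothetical hook that exists only so the function can be exercised without touching the network.

```python
import time

import requests


def fetch_with_retries(url, get=requests.get, max_attempts=3, delay_seconds=2.0, timeout=10):
    """Return the page HTML, or None if every attempt fails."""
    for attempt in range(1, max_attempts + 1):
        try:
            response = get(url, timeout=timeout)
            response.raise_for_status()  # turn 4xx/5xx responses into exceptions
            return response.text
        except requests.RequestException as exc:
            print(f"Attempt {attempt} for {url} failed: {exc}")
            if attempt < max_attempts:
                # Wait longer after each failure before trying again.
                time.sleep(delay_seconds * attempt)
    return None
```

You would call it as `html_content = fetch_with_retries('https://example.com')` and check for `None` before parsing. When scraping many URLs, add a `time.sleep()` between successful requests as well, not only after failures.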

Respect Robots.txt

Check the website’s robots.txt file to understand the limitations or guidelines for web scraping. Respect these rules to maintain good web scraping practices.
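
Python's standard library can do this check for you through `urllib.robotparser`. The sketch below parses a made-up robots.txt from a string so it runs offline; the rules, the `my-scraper` user agent, and the `build_parser` helper are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "my-scraper"  # hypothetical name for your scraper


def build_parser(robots_txt):
    """Create a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

print(parser.can_fetch(USER_AGENT, "https://example.com/news/"))         # True
print(parser.can_fetch(USER_AGENT, "https://example.com/private/data"))  # False
print(parser.crawl_delay(USER_AGENT))                                    # 5
```

Against a live site you would instead call `parser.set_url('https://example.com/robots.txt')` followed by `parser.read()`, then use `can_fetch()` before each request and honor any crawl delay with `time.sleep()`.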

Legal and Ethical Considerations

Always ensure that your web scraping activities align with the website’s terms of service and comply with relevant laws and regulations.

Web scraping with Python is a powerful and accessible skill for anyone looking to extract data from the web. Python’s web scraping capabilities, when coupled with the right tools and techniques, will enable you to collect and utilize data from the web efficiently.