In this article, we are going to explore reinforcement learning, an important subdomain of artificial intelligence, and implement a simple example in Python. In recent years, there have been many advances in this fascinating area of technology. One exciting example comes from DeepMind, which developed the Deep Q-Network (DQN) architecture and later built AlphaGo, a program that combined deep neural networks, reinforcement learning, and tree search to beat a world champion at the game of Go.

What is Reinforcement Learning?

Reinforcement learning is a machine learning method that deals with how software agents should take actions in an environment. The agent receives rewards for correct actions and penalties for incorrect ones. The core principle to note is that the agent learns from this feedback rather than from explicit human instruction.

In reinforcement learning, an artificial intelligence model faces a game-like situation and uses trial and error to come up with a solution to the problem. The ultimate goal of the model is to maximize the total reward, starting from completely random trials and gradually finishing with better results.

Important Terms in Reinforcement Learning

Let us look at some of the most important and common terms used in reinforcement learning:

- Agent – The AI entity that performs actions in an environment to gain rewards.

- Environment – A scenario or situation that an agent has to face.

- Reward – A bonus given to an agent when it performs a specific action or task correctly.

- State – State refers to the current situation in the environment.

- Policy – The strategy the agent applies to decide its next action based on the current state.

- Value – The expected long-term reward with discounting, as opposed to the short-term reward.
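To make the difference between a short-term reward and a long-term discounted value concrete, here is a minimal sketch that computes the discounted return of a sequence of rewards. The function name `discounted_return` and the discount factor `gamma=0.9` are illustrative assumptions, not part of the original article: a reward received k steps in the future is simply weighted by gamma to the power k.

```python
def discounted_return(rewards, gamma=0.9):
    """Hypothetical helper: sum of rewards, where a reward k steps ahead is weighted by gamma**k."""
    total = 0.0
    # Walk backwards so each step applies G_t = r_t + gamma * G_{t+1}
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# The same reward is worth less the later it arrives
print(discounted_return([1.0, 0.0, 0.0, 0.0]))  # 1.0
print(discounted_return([0.0, 0.0, 0.0, 1.0]))  # roughly 0.729 (0.9 ** 3)
```

A smaller `gamma` makes the agent more short-sighted; a value close to 1 makes distant rewards count almost as much as immediate ones.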

Comparison of Reinforcement learning with Supervised and Unsupervised learning

Reinforcement learning differs from supervised learning in that supervised training data is labeled, so the model is trained on the exact expected outcome, whereas in reinforcement learning there is no such labeled data. Instead, the reinforcement agent decides how to perform the given task and learns from its own experience.

As in reinforcement learning, unsupervised learning uses no labeled data, but unlike reinforcement learning it has no reward signal. Unsupervised learning algorithms group data based on similarities, whereas reinforcement learning algorithms deal with the behavior of an agent.

Types of Reinforcement Learning

There are two types of reinforcement learning methods: positive and negative. What do we do when a child makes a mistake? We correct them to make sure they do not repeat the bad behavior, which is an example of negative learning. On the other hand, if a child does something good, we reward the child to encourage the good behavior, which is an example of positive learning. Let's discuss positive and negative learning in more detail.

Positive Learning

Positive learning (positive reinforcement) is defined as an event that occurs because of a specific behavior. It increases the strength and frequency of that behavior and has a positive impact on the actions taken by the agent. This type of reinforcement helps maximize performance and sustain change over a longer period. However, too much reinforcement may lead to over-optimization of the state, which can affect the results.

Negative Learning

Negative reinforcement is defined as the strengthening of a behavior that occurs because a negative condition is stopped or avoided. It helps define a minimum standard of performance. However, its drawback is that it provides only enough motivation to meet that minimum behavior.

Reinforcement learning algorithms

The field of reinforcement learning is made up of several algorithms that take different approaches. The differences come mainly from their strategies for exploring their environments. Some of the important reinforcement learning algorithms are listed below.

Q-learning

In this reinforcement learning algorithm, the agent is not given a policy, so its exploration of the environment is more self-directed. A policy is essentially a set of probabilities that tells the agent how likely it is to choose each action in a given state, ideally favoring actions that lead to rewards or beneficial states.
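One common way for a self-directed agent to balance exploring new actions with exploiting what it already knows is epsilon-greedy action selection. The sketch below is an illustration under assumptions: the agent's value estimates are stored in a plain dictionary mapping action to value, and the function name and the exploration probability `epsilon` are hypothetical choices rather than something the article prescribes.

```python
import random


def epsilon_greedy(q_values, actions, epsilon=0.1, rng=random):
    """Hypothetical helper: random action with probability epsilon, else the highest-valued action.

    q_values maps action -> estimated value; actions missing from it count as 0.0.
    """
    if rng.random() < epsilon:
        # Explore: try any action uniformly at random
        return rng.choice(actions)
    # Exploit: choose the action currently believed to be best
    return max(actions, key=lambda a: q_values.get(a, 0.0))


q = {"left": 0.2, "right": 0.8}
print(epsilon_greedy(q, ["left", "right"], epsilon=0.0))  # right
```

With `epsilon=0.0` the agent always exploits; raising it makes the agent try more random actions, which matters early on when its estimates are still poor.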

Q-learning is a model-free reinforcement learning algorithm that seeks to find the best course of action given the current state of the agent. Model-free means that the agent does not build or use a model that predicts how the environment will respond; instead, it learns directly from the rewards it receives through trial and error.

Depending on the current state of the agent in the environment, it decides the next action to take. The main objective of the model is to find the best possible action given its current state. Q-learning is called off-policy because it learns the value of the best (greedy) action in each state even while the agent follows a different, more exploratory policy to choose its actions, for example by occasionally taking random actions.
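The Q-learning update can be sketched on a toy problem. The example below is a minimal tabular sketch under several assumptions: a hypothetical five-state corridor (`env_step`) in which reaching the last state pays a reward of 1 and ends the episode, and arbitrary hyperparameters `alpha` (learning rate), `gamma` (discount), and `epsilon` (exploration rate). Each update moves Q(s, a) toward r + gamma * max over a' of Q(s', a'); because that target uses the best next action rather than the one the exploring agent will actually take, the method is off-policy.

```python
import random

ACTIONS = [-1, 1]       # move left or right (hypothetical toy task)
N_STATES, GOAL = 5, 4   # states 0..4; reaching state 4 pays reward 1 and ends the episode


def env_step(state, action):
    """Hypothetical corridor environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Behavior policy: epsilon-greedy exploration
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                # Break ties randomly so the untrained agent does not always pick the same move
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = env_step(state, action)
            # Off-policy target: value of the best next action, regardless of what is done next
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q


q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)}
print(policy)  # {0: 1, 1: 1, 2: 1, 3: 1}: move right in every non-goal state
```

No policy is handed to the agent; the greedy policy is read off the learned Q-table at the end.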

An example of Q-learning is a product recommendation system. In a typical product recommendation system, the suggestions we get are based on our previous purchases or the websites we have visited. If we have looked for a western dress before, the model will recommend collections of western wear from different brands.

State-Action-Reward-State-Action (SARSA)

This reinforcement learning algorithm starts by giving the agent what is known as a policy. Q-learning is an off-policy technique and uses the greedy approach to learn the Q-value: its update assumes that the best action will be taken in the next state. SARSA, on the other hand, is an on-policy technique and uses the action actually chosen by the current policy in the next state to learn the Q-value.
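The only difference between the two update rules is the target they move Q(s, a) toward. The sketch below, assuming a Q-table stored as a dictionary keyed by (state, action) and hypothetical function names, computes both targets side by side for the same transition.

```python
def q_learning_target(q, reward, next_state, actions, gamma=0.9):
    # Off-policy: assume the best action will be taken in next_state
    return reward + gamma * max(q[(next_state, a)] for a in actions)


def sarsa_target(q, reward, next_state, next_action, gamma=0.9):
    # On-policy: use the action the current policy actually chose in next_state
    return reward + gamma * q[(next_state, next_action)]


def td_update(q, state, action, target, alpha=0.5):
    # Move Q(s, a) a fraction alpha of the way toward the chosen target
    q[(state, action)] += alpha * (target - q[(state, action)])


# Toy Q-table (assumed values): in state "s2", "right" looks best,
# but suppose the exploring policy actually picked "left" there.
q = {("s1", "left"): 0.0, ("s1", "right"): 0.0,
     ("s2", "left"): 1.0, ("s2", "right"): 5.0}
print(q_learning_target(q, 0.0, "s2", ["left", "right"]))  # 4.5 (uses the best action)
print(sarsa_target(q, 0.0, "s2", "left"))                   # 0.9 (uses the action taken)
```

Because SARSA's target reflects the exploratory moves the agent really makes, it tends to learn more cautious behavior than Q-learning when exploration is risky.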

Deep Q-Networks

These algorithms combine neural networks with reinforcement learning techniques. As in Q-learning, the main objective is to learn a policy that tells the agent which actions to take in which circumstances to maximize the reward; a Deep Q-Network does this by using a neural network to estimate the Q-values instead of storing them in a table. During training, the network learns from random samples of past experiences (transitions) kept in memory, a technique known as experience replay.
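The experience-replay part of a Deep Q-Network can be sketched without any deep learning library. The class below is a hypothetical, minimal buffer: it stores transitions and hands back random mini-batches. In a real DQN those batches would be used to train the neural network (not shown here), and the capacity and batch size used below are arbitrary assumptions.

```python
import random
from collections import deque


class ReplayBuffer:
    """Hypothetical minimal experience-replay memory for DQN-style training."""

    def __init__(self, capacity=10_000):
        # Oldest transitions are dropped automatically once capacity is reached
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the correlation between consecutive steps
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


buffer = ReplayBuffer(capacity=1000)
for t in range(100):
    # Dummy transitions: (state, action, reward, next_state, done)
    buffer.push(t, t % 2, 1.0, t + 1, False)
batch = buffer.sample(32)
print(len(buffer), len(batch))  # 100 32
```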

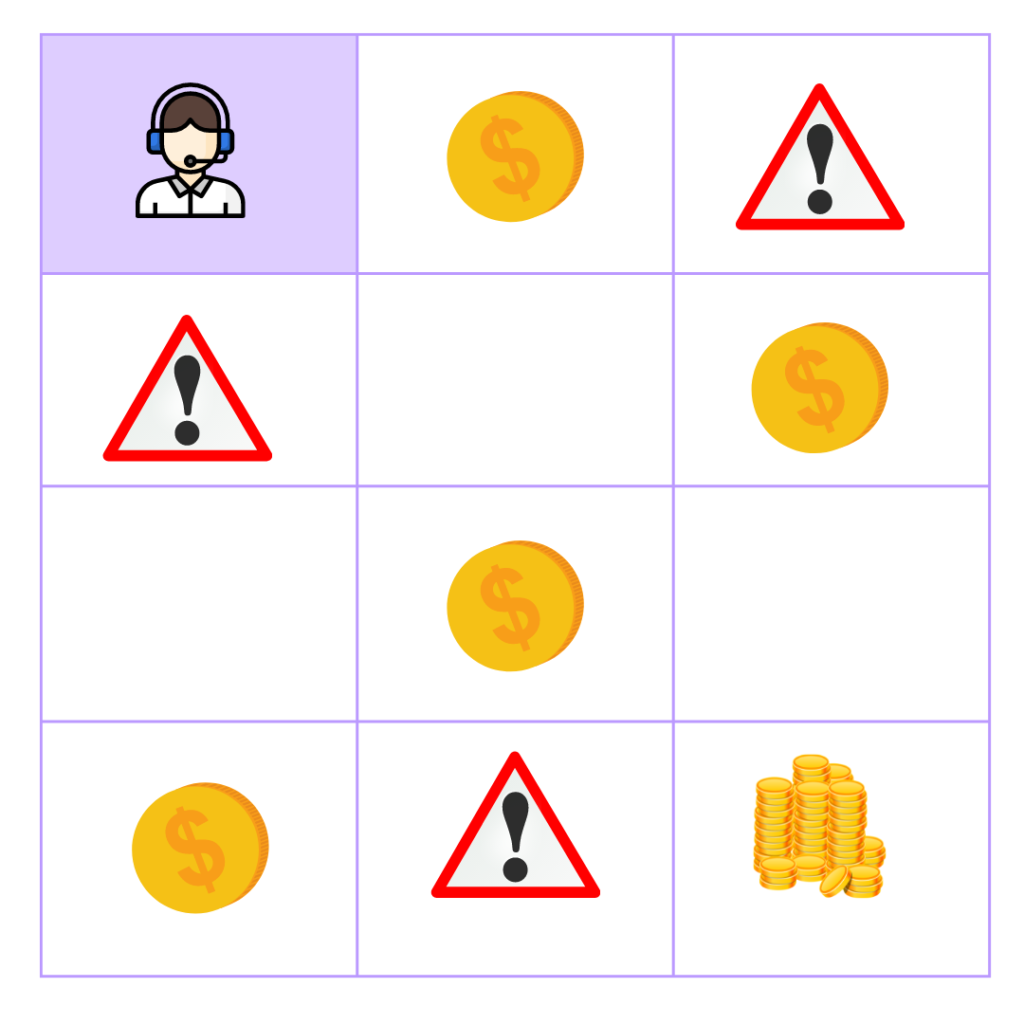

Example of Reinforcement Learning

Consider an AI agent placed in a maze environment whose goal is to find a reward. The agent interacts with the environment by performing actions; based on those actions, the agent's state changes, and it receives a reward or a penalty as feedback.

The agent keeps repeating three things: taking an action, changing state (or remaining in the same state), and receiving feedback. By doing so, the agent learns which actions lead to positive feedback (rewards) and which lead to negative feedback (penalties). As a reward, the agent gets a bonus point, and as a penalty, it gets a negative point.
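The act, change-state, receive-feedback loop described above can be sketched with a tiny hand-made maze. The maze layout, the +1 bonus and -1 penalty values, and the function names are illustrative assumptions; the agent here simply moves at random, so the sketch shows the interaction loop rather than any learning.

```python
import random

# Hypothetical 3x3 maze: "S" start, "G" goal (reward), "#" wall, "." open cell
MAZE = [
    "S.#",
    "..#",
    "#.G",
]
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(pos, move):
    """Apply a move and return (new_pos, feedback): +1 at the goal, -1 for hitting a wall."""
    r, c = pos[0] + MOVES[move][0], pos[1] + MOVES[move][1]
    if not (0 <= r < len(MAZE) and 0 <= c < len(MAZE[0])) or MAZE[r][c] == "#":
        return pos, -1          # penalty: bumped into a wall, state stays the same
    if MAZE[r][c] == "G":
        return (r, c), 1        # bonus point: the reward was found
    return (r, c), 0            # ordinary move, no feedback


def run_episode(max_steps=50, seed=None):
    rng = random.Random(seed)
    pos, score = (0, 0), 0
    for _ in range(max_steps):
        move = rng.choice(list(MOVES))     # take an action (random agent, no learning)
        pos, feedback = step(pos, move)    # new (or same) state plus feedback
        score += feedback
        if MAZE[pos[0]][pos[1]] == "G":
            break
    return pos, score


final_pos, total = run_episode(seed=42)
print(final_pos, total)
```

Replacing the random choice with a learned rule, such as Q-learning, is what turns this loop into reinforcement learning.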

Implementation of Reinforcement Learning in Python

Let's do a simple implementation of reinforcement learning in Python to better understand the concepts we have discussed so far. We can either use Google Colab (https://colab.research.google.com/) or run the code locally with Python; the random module used here is part of Python's standard library, so nothing needs to be installed.

Checking whether an action taken by the agent was correct or wrong would normally require some task-specific logic. To keep this example simple, the environment instead returns a random reward using the random module.

```python
# Importing the random module
import random
```

We use two classes, Environment and Agent, in our model.

The Environment class represents the agent's environment. It needs member functions that return the current observation (the state the agent is in) and the available actions, hand out a reward when an action is taken, and keep track of how many steps the agent has left before the game is over. In this example, the agent must finish the game in at most ten steps.

```python
# Creating the Environment class
class Environment:
    def __init__(self):
        # The game ends after ten steps
        self.steps_left = 10

    def get_observation(self):
        # Fixed dummy observation; a real environment would return its current state
        return [1.0, 2.0, 1.0]

    def get_actions(self):
        # The two possible actions
        return [-1, 1]

    def check_is_done(self):
        return self.steps_left == 0

    def action(self, chosen_action):
        if self.check_is_done():
            raise Exception("Game over")
        self.steps_left -= 1
        # The chosen action is ignored; the reward is a random number in [0.0, 1.0)
        return random.random()
```

The Agent class is simpler than the Environment class. The agent takes an action and collects the reward that its environment returns. For this, we need one data member and one member function.

```python
# Creating the Agent class
class Agent:
    def __init__(self):
        # Running sum of all rewards received so far
        self.total_rewards = 0.0

    def step(self, ob: Environment):
        curr_obs = ob.get_observation()
        # print(curr_obs, end=" ")
        curr_action = ob.get_actions()
        # print(curr_action)
        # Pick one of the available actions at random and collect the reward
        curr_reward = ob.action(random.choice(curr_action))
        self.total_rewards += curr_reward
        # print("Total rewards so far= %.3f " % self.total_rewards)
```

The while loop keeps running until the game is over. In each iteration, the agent takes an action by calling the step method of the Agent class and passing obj, which refers to the agent's environment. The environment ignores which action (-1 or 1) was chosen and returns a random reward between 0 and 1, which is added to the agent's total reward.

if name=='main':

obj=Environment()

agent=Agent()

step_number=0

while not obj.check_is_done():

step_number+=1

#print("Step-",step_number, end=" ")

agent.step(obj)

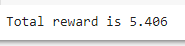

print("Total reward is %.3f "%agent.total_rewards)Output:

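On executing the code, we get the total reward accumulated by the agent over the 10 steps; uncommenting the print statements shows the observation, actions, and running reward at each step. Because the rewards are random, the output differs each time we play the game.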

Complete code for Reinforcement Learning in Python

```python
# Importing the random module
import random


# Creating the Environment class
class Environment:
    def __init__(self):
        # The game ends after ten steps
        self.steps_left = 10

    def get_observation(self):
        return [1.0, 2.0, 1.0]

    def get_actions(self):
        return [-1, 1]

    def check_is_done(self):
        return self.steps_left == 0

    def action(self, chosen_action):
        if self.check_is_done():
            raise Exception("Game over")
        self.steps_left -= 1
        # Random reward in [0.0, 1.0); the chosen action does not affect it
        return random.random()


# Creating the Agent class
class Agent:
    def __init__(self):
        self.total_rewards = 0.0

    def step(self, ob: Environment):
        curr_obs = ob.get_observation()
        # print(curr_obs, end=" ")
        curr_action = ob.get_actions()
        # print(curr_action)
        curr_reward = ob.action(random.choice(curr_action))
        self.total_rewards += curr_reward
        # print("Total rewards so far= %.3f " % self.total_rewards)


if __name__ == '__main__':
    obj = Environment()
    agent = Agent()
    step_number = 0
    while not obj.check_is_done():
        step_number += 1
        # print("Step-", step_number, end=" ")
        agent.step(obj)
    print("Total reward is %.3f " % agent.total_rewards)
```

Applications of Reinforcement Learning

- Reinforcement learning solves a specific type of problem where decision-making is sequential and the goal is long-term, such as playing games.

- RL can be used in robotics for industrial automation.

- RL can be effectively used in machine learning and data processing.

- RL is used in business strategy planning.

Conclusion

In this article, we have learned about the idea of reinforcement learning, its important terminology, the types of reinforcement learning, several reinforcement learning algorithms, a basic implementation of reinforcement learning in Python, and some interesting applications. Reinforcement learning is a cutting-edge technology with the potential to transform the world of technology.

I hope you found this article helpful. Leave a comment if you have any doubts or would like us to write about another topic.

Thank you for visiting our website.
