Introduction

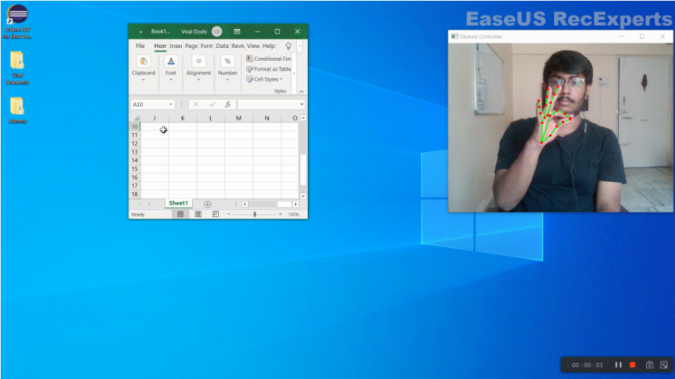

Gesture Controlled Virtual Mouse makes human-computer interaction simple by using hand gestures and voice commands, so the computer requires almost no direct contact. All I/O operations can be controlled virtually with static and dynamic hand gestures together with a voice assistant. The project uses state-of-the-art machine learning and computer vision algorithms to recognize hand gestures and voice commands, and it runs without any additional hardware. It relies on models such as the CNNs provided by MediaPipe, whose Python bindings are built on pybind11. It consists of two modules: one works directly on bare hands using MediaPipe hand detection, and the other works with gloves of any uniform color. Currently, it supports the Windows platform.
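The text does not spell out how a detected hand position becomes a cursor position. A minimal sketch, assuming the tracker reports a fingertip as normalized (x, y) coordinates in [0, 1] (which is how MediaPipe Hands reports landmarks), is to scale that point to screen pixels and smooth it to reduce jitter. `landmark_to_screen` and `CursorSmoother` are hypothetical helpers for illustration, not functions from the project.

```python
def landmark_to_screen(x_norm, y_norm, screen_w, screen_h, mirror=True):
    """Map a normalized landmark (0..1) to integer pixel coordinates.

    Assumption: webcam frames are mirrored, so x is flipped by default.
    """
    # Clamp in case the landmark drifts slightly outside the frame
    x_norm = min(max(x_norm, 0.0), 1.0)
    y_norm = min(max(y_norm, 0.0), 1.0)
    if mirror:
        x_norm = 1.0 - x_norm
    # Scale to the last valid pixel index on each axis
    return round(x_norm * (screen_w - 1)), round(y_norm * (screen_h - 1))


class CursorSmoother:
    """Exponential moving average over cursor positions (hypothetical helper)."""

    def __init__(self, alpha=0.5):
        self.alpha = alpha  # 1.0 = no smoothing, closer to 0 = heavier smoothing
        self.prev = None

    def update(self, point):
        x, y = point
        if self.prev is None:
            self.prev = (float(x), float(y))
        else:
            px, py = self.prev
            # Move a fraction `alpha` of the way toward the new point
            self.prev = (px + self.alpha * (x - px), py + self.alpha * (y - py))
        return self.prev
```

In a real capture loop you might read `results.multi_hand_landmarks[0].landmark[8]` (MediaPipe's index-finger tip), pass its `x` and `y` through both helpers, and hand the result to a cursor library such as `pyautogui.moveTo(x, y)`. The smoothing factor trades responsiveness for stability.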

Video Demonstration: link

Note: Use Python 3.8.5.

Gesture Recognition:

- Neutral Gesture
- Move Cursor
- Left Click
- Right Click
- Double Click
- Scrolling
- Drag and Drop
- Multiple Item Selection
- Volume Control
- Brightness Control
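The gesture list does not say which hand poses trigger each action. One common approach, sketched here under stated assumptions, is to decide which fingers are extended by comparing each fingertip with its middle (PIP) joint, look the resulting set up in a table, and map the thumb-to-index distance to a 0 to 100 level for controls such as volume or brightness. The landmark indices are MediaPipe Hands' standard ones; the `GESTURES` table, the distance thresholds, and all function names are hypothetical and are not the project's actual mapping.

```python
import math

# MediaPipe Hands landmark indices: (fingertip, PIP joint) per finger
FINGERS = {
    "index": (8, 6),
    "middle": (12, 10),
    "ring": (16, 14),
    "pinky": (20, 18),
}
THUMB_TIP, INDEX_TIP = 4, 8

# Hypothetical pose-to-gesture table, for illustration only
GESTURES = {
    frozenset(): "Neutral Gesture",
    frozenset({"index", "middle"}): "Move Cursor",
    frozenset({"middle"}): "Left Click",
    frozenset({"index"}): "Right Click",
}


def fingers_up(landmarks):
    """Return fingers whose tip is above its PIP joint.

    `landmarks` is a list of 21 normalized (x, y) points; image y grows downward.
    """
    return {name for name, (tip, pip) in FINGERS.items()
            if landmarks[tip][1] < landmarks[pip][1]}


def classify(landmarks):
    """Look up the current finger pattern, defaulting to the neutral gesture."""
    return GESTURES.get(frozenset(fingers_up(landmarks)), "Neutral Gesture")


def pinch_to_level(landmarks, min_d=0.02, max_d=0.25):
    """Map thumb-index distance linearly onto 0..100 (assumed thresholds)."""
    (x1, y1), (x2, y2) = landmarks[THUMB_TIP], landmarks[INDEX_TIP]
    d = math.hypot(x2 - x1, y2 - y1)
    t = (d - min_d) / (max_d - min_d)
    return round(100 * min(max(t, 0.0), 1.0))
```

A table lookup keeps the pose definitions in one place, so adding a gesture such as Double Click means adding one entry rather than another branch.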

Getting Started


This section lists everything you need to run the gesture-controlled virtual mouse on your system.

Pre-requisites

Python: (3.6 – 3.8.5)

Anaconda Distribution: download it from the official Anaconda website.
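To confirm that an interpreter falls inside the documented range (3.6 to 3.8.5) before installing anything, a small check like the following could be run. It is a minimal sketch; `python_supported` and the range constants are illustrative names, not part of the repository.

```python
import sys

# Documented supported range (inclusive)
SUPPORTED_MIN = (3, 6, 0)
SUPPORTED_MAX = (3, 8, 5)


def python_supported(version=None):
    """True if (major, minor, micro) lies within the documented range."""
    v = tuple(version if version is not None else sys.version_info[:3])
    return SUPPORTED_MIN <= v <= SUPPORTED_MAX


if __name__ == "__main__":
    current = ".".join(map(str, sys.version_info[:3]))
    if python_supported():
        print(f"Python {current} is within the supported range.")
    else:
        print(f"Python {current} is outside 3.6 to 3.8.5; use the conda environment from Step 1.")
```

Tuple comparison handles the major, minor, and micro parts in order, so 3.8.6 is rejected while 3.7.x passes.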

Procedure

git clone https://github.com/xenon-19/Gesture-Controlled-Virtual-Mouse.git


Step 1:

conda create --name gest python=3.8.5

Step 2:

conda activate gest
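`conda activate` sets the `CONDA_DEFAULT_ENV` environment variable to the name of the active environment, so a script can check that it is running inside `gest` before the remaining steps. This is a minimal sketch; the function names are hypothetical, and the optional `environ` argument exists only to make the check easy to test.

```python
import os


def active_conda_env(environ=None):
    """Return the active conda environment name, or None if none is active."""
    env = os.environ if environ is None else environ
    return env.get("CONDA_DEFAULT_ENV")


def in_expected_env(expected="gest", environ=None):
    """True if the active conda environment matches `expected`."""
    return active_conda_env(environ) == expected


if __name__ == "__main__":
    print("Active conda env:", active_conda_env())
    print("Inside 'gest':", in_expected_env())
```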

Step 3:

pip install -r requirements.txt

Step 4:

conda install PyAudio

conda install pywin32
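After Steps 3 and 4, you can check that the key packages are importable without actually importing them. The sketch uses `importlib.util.find_spec`, which returns `None` for a top-level module that cannot be found. The module list is an assumption (`pyaudio` for PyAudio, `win32api` from pywin32, plus `cv2` and `mediapipe` as likely vision dependencies); the authoritative list is `requirements.txt`.

```python
import importlib.util

# Assumed import names; check requirements.txt for the real dependency list
MODULES = ["cv2", "mediapipe", "pyaudio", "win32api"]


def missing_modules(names=MODULES):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All listed modules are installed.")
```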

Step 5:

Change into the src folder of the cloned repository.

The command may look like this: cd C:\Users\.....\Gesture-Controlled-Virtual-Mouse\src
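Because both entry points named in Step 6 (`Proton.py` and `Gesture_Controller.py`) live in `src`, a quick way to confirm you are in the right folder is to check that those files exist. This is a minimal sketch with a hypothetical helper name.

```python
from pathlib import Path

# Entry-point scripts named in Step 6
ENTRY_POINTS = ("Proton.py", "Gesture_Controller.py")


def is_src_folder(path="."):
    """True if every entry-point script exists in `path`."""
    folder = Path(path)
    return all((folder / name).is_file() for name in ENTRY_POINTS)


if __name__ == "__main__":
    print("In src folder:", is_src_folder())
```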

Step 6:

To run the voice assistant:

python Proton.py

(You can then enable gesture recognition with the voice command “Proton Launch Gesture Recognition”.)

Alternatively, to run only gesture recognition without the voice assistant:

Uncomment the last two lines of code in Gesture_Controller.py, then run:

python Gesture_Controller.py
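The voice command “Proton Launch Gesture Recognition” follows a wake-word-plus-command pattern. How Proton handles it internally is not described here, so the following is only a hypothetical sketch of one way such a command could be routed, assuming a speech recognizer has already produced a text transcript. `WAKE_WORD`, `HANDLERS`, and `launch_gesture_recognition` are illustrative names, not Proton's actual API.

```python
WAKE_WORD = "proton"


def parse_command(transcript):
    """Return the command text after the wake word, or None if it is absent."""
    words = transcript.lower().split()
    if not words or words[0] != WAKE_WORD:
        return None
    return " ".join(words[1:])


def launch_gesture_recognition():
    # Placeholder: a real assistant would start the gesture controller here
    return "gesture recognition started"


# Hypothetical command table mapping normalized phrases to handlers
HANDLERS = {"launch gesture recognition": launch_gesture_recognition}


def dispatch(transcript):
    """Run the handler for a recognized command; return None otherwise."""
    handler = HANDLERS.get(parse_command(transcript))
    return handler() if handler else None
```

Lowercasing and splitting on whitespace makes the match tolerant of capitalization and extra spaces in the transcript.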
