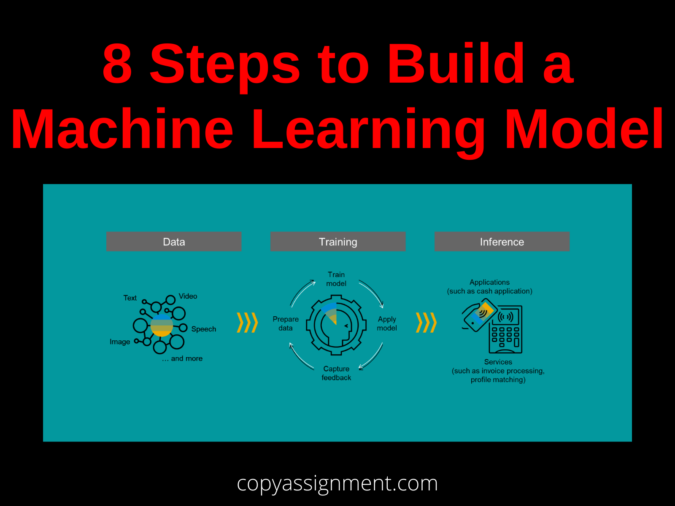

In this article, we will walk through the steps in building a machine learning model. Let us first understand what Machine Learning is. It is a field of Artificial Intelligence in which humans train a system to learn, analyze, and make decisions. Machine Learning is an important part of Data Science. A Machine Learning algorithm or model improves its performance over time by learning from experience.

For an overview of Machine Learning, see https://copyassignment.com/machine-learning-a-gentle-introduction/

Machine learning models have wide applications in many fields. Some of the most widely used applications are email spam filtering, web search results, pattern and image recognition, and video recommendation. Machine Learning is becoming a more widely accepted and adopted technology in fields such as health care, banking, and e-commerce. Most social media and content-delivery networks use Machine Learning algorithms to provide a more personalized and enjoyable experience.

To build a Machine Learning model, there are 8 steps to follow. You do not have to use every step; you can skip one or two of them depending on your model. For better accuracy, however, follow all of these steps. The steps in machine learning model building are as follows.

- Understand the problem

- Collect and Process the data

- Split the data

- Choose appropriate model

- Train the model

- Evaluate the model

- Hyperparameter Tuning

- Prediction

Let us see how to build a Machine Learning model following these steps.

Understand the problem

Before starting to build any machine learning model, first analyze and understand the purpose for which you are building it. This helps you choose an appropriate algorithm for the model and leads to better results. If you understand the problem clearly, you will be able to list some potential solutions to test in order to find the best model. Expect to try out a few solutions before you land on a good working model.

To understand the steps more clearly, let us consider the example of identifying fruits by their color, shape, and size. This basic example keeps the process simple. We could add more features to get better results, but for the sake of simplicity we use three: the first feature is the color of the fruit, the second is its shape, and the third is its size. Using these features, our model will identify the name of the fruit.

It is good to have basic knowledge of the field in which you are developing the model. For example, if you are developing a model for credit card fraud detection, you should learn to understand how the industry operates and analyze the problem completely to build a better model.

Data Collection and Data Preprocessing

The collection of data is the foundation of the Machine Learning process. The better the data collected, the better the model will be. Choosing incorrect features or too few features for the dataset may reduce the efficiency of the model, so it is very important to concentrate on the dataset before starting to build the model. If you want to build a model with a sample dataset, many datasets are already available at https://www.kaggle.com/, and you can pick the one your model requires from there.

| Color | Shape | Size | Fruit Name |
| --- | --- | --- | --- |
| Red | Conical | Small | Strawberry |
| Orange | Round | Medium | Orange |
| Green | Ellipsoidal | Medium | Guava |
| Red | Round Conical | Medium | Apple |
| Green | Oval | Large | Watermelon |

The dataset used in the Machine Learning model is simply an M x N matrix, where M is the number of rows (samples) and N is the number of columns (features). It can be further broken into X (independent variables) and Y (dependent variables).
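As a minimal sketch of this idea, the fruit table above can be written as a list of rows and then separated into X and y. The variable names here are illustrative and not taken from any particular library.

```python
# Toy fruit dataset from the table above; each row is one sample.
rows = [
    ("Red", "Conical", "Small", "Strawberry"),
    ("Orange", "Round", "Medium", "Orange"),
    ("Green", "Ellipsoidal", "Medium", "Guava"),
    ("Red", "Round Conical", "Medium", "Apple"),
    ("Green", "Oval", "Large", "Watermelon"),
]

# X holds the independent variables (color, shape, size) for every sample.
X = [row[:3] for row in rows]
# y holds the dependent variable (fruit name) for every sample.
y = [row[3] for row in rows]

n_samples, n_features = len(X), len(X[0])
print(n_samples, n_features)  # 5 3
```

In practice, categorical values like these would usually be encoded as numbers before being passed to a learning algorithm.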

Exploratory Data Analysis

Exploratory Data Analysis (EDA) is done in order to gain an understanding of the dataset. Some of the common approaches for EDA include:

- Data visualization – Scatter plot, heat map, box plot, etc.

- Descriptive statistics – Mean, median, Standard deviation, etc.
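The descriptive statistics listed above can be computed with Python's standard `statistics` module. This is only a sketch: the fruit weights below are made-up values used for illustration.

```python
import statistics

# Hypothetical fruit weights in grams, made up for illustration.
weights = [12.0, 150.0, 95.0, 180.0, 4200.0]

mean = statistics.mean(weights)
median = statistics.median(weights)
stdev = statistics.stdev(weights)  # sample standard deviation

print(f"mean={mean:.1f} median={median:.1f} stdev={stdev:.1f}")
```

Here the mean is far larger than the median, which hints that one value (the 4200 g fruit) is an outlier worth inspecting.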

Once we have analyzed the data and chosen suitable features, the next step is to preprocess the data. The quality of the data has a huge impact on the quality of the model. Data preprocessing is a technique to convert the collected data into a clean dataset. It is one of the vital steps in building a machine-learning model, and it is also known as data wrangling or data cleaning.

Some datasets contain missing values, duplicate values, or incorrect data. In those cases, you can remove the rows that have a null value for a feature, or remove a particular column where most values are missing. Alternatively, calculate the mean of the column (feature) that contains a missing value and use it to replace the missing value. Most datasets also have a large number of features. Each feature adds a dimension, which makes the data difficult to model and visualize. The number of dimensions can be reduced by methods like Principal Component Analysis (PCA) and Singular Value Decomposition (SVD).
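A minimal sketch of two of these cleaning steps, removing duplicate rows and filling missing values with the column mean, might look like this. The sample values are hypothetical, and `None` is assumed to mark a missing value.

```python
# Hypothetical numeric samples; None marks a missing value.
samples = [
    [5.0, 1.0],
    [None, 2.0],
    [7.0, None],
    [5.0, 1.0],  # exact duplicate of the first row
]

# 1) Drop exact duplicate rows while keeping the original order.
unique = []
for row in samples:
    if row not in unique:
        unique.append(row)

# 2) Replace each missing value with the mean of its column (feature).
n_cols = len(unique[0])
col_means = []
for j in range(n_cols):
    present = [row[j] for row in unique if row[j] is not None]
    col_means.append(sum(present) / len(present))

cleaned = [
    [col_means[j] if row[j] is None else row[j] for j in range(n_cols)]
    for row in unique
]
print(cleaned)  # [[5.0, 1.0], [6.0, 2.0], [7.0, 1.5]]
```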

Feature Scaling

Feature scaling is the final step in the data preprocessing phase. Many learning algorithms perform much better when the features are on the same scale. Well-prepared data generally gives better results, so it is important to fine-tune the data at every step of building the model. The most common techniques used in feature scaling are:

- Normalization – It is a method to rescale the features of the data to a specific range, usually [0,1]. To normalize the data, the min-max scaling method is applied to each feature column.

Xchanged = ( X – Xmin )/ ( Xmax – Xmin )

- Standardization – It centers each feature column at mean 0 with standard deviation 1 so that the feature columns have the same scale. Unlike min-max scaling, it does not squeeze the values into a fixed range, so it keeps the information about outliers and makes the model less sensitive to them.

Z = ( X – μ ) / σ

where, μ – Mean, σ – Standard deviation
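Both scaling formulas can be sketched in a few lines of plain Python. The function names and the sample column below are illustrative, and the sketch assumes the column contains at least two distinct values so that neither denominator is zero.

```python
import statistics

def min_max_scale(values):
    """Rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def standardize(values):
    """Center values at mean 0 with standard deviation 1."""
    mu = statistics.mean(values)
    sigma = statistics.pstdev(values)  # population standard deviation
    return [(v - mu) / sigma for v in values]

sizes = [2.0, 4.0, 6.0, 8.0]  # hypothetical feature column
print(min_max_scale(sizes))
print(standardize(sizes))
```

The scaling statistics (minimum, maximum, mean, standard deviation) are normally computed on the training data only and then reused to transform the test data.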

Split the data

The next important step is to divide the dataset into two separate sets: a training set and a test set.

The training data is the subset of the dataset used for training the machine learning model. Here we already know the output. The testing data is the subset of the dataset used for testing the machine learning model, which predicts the outcomes of the test dataset. The data is usually split in a ratio of approximately 80:20 or 70:30. The larger part is for training and the smaller part is for testing. This separation is important because testing a model on the same data it was trained on gives a misleadingly optimistic picture of its performance.

Another common approach is to split the data into 3 portions: training data, validation data, and testing data. As explained before, the training set is used to train the model. The validation set is used for evaluation while model tuning (like hyperparameter tuning) is done. The testing set is then used for a final test of the model.
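A two-way split can be sketched as follows. The helper `train_test_split` here is a hypothetical stand-alone function written for this example (it is not the scikit-learn function of the same name), and the fixed seed is an assumption made so the shuffle is repeatable.

```python
import random

def train_test_split(samples, test_ratio=0.2, seed=42):
    """Shuffle a copy of the samples and split it into train and test lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]

data = list(range(10))  # stand-in for 10 labeled samples
train, test = train_test_split(data, test_ratio=0.2)
print(len(train), len(test))  # 8 2
```

Applying the same function again to the training portion would produce a separate validation set for the three-way split.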

Cross-Validation

In order to use the data effectively, N-fold cross-validation is used in splitting the data. Here the data is split into N folds. For example, in a 10-fold CV, 9 folds are used for training and the remaining fold is used for testing. The folds are rotated iteratively so that every fold gets a chance to be the testing data. A total of 10 models are built this way, and performance metrics are calculated for each of them. The final decision is made after analyzing those performance metrics.
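The fold rotation can be sketched with a small generator that yields the training and testing indices for each round. The function name `k_fold_indices` is made up for this example, and the sketch does not shuffle the data first, which a real pipeline usually would.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size

folds = list(k_fold_indices(n_samples=20, k=10))
print(len(folds))  # 10
```

Each sample index appears in exactly one test fold, so every sample is used for testing once and for training in all other rounds.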

Choose appropriate model

After splitting the data, our next task is to find a good algorithm for our model. This is one of the most important steps in machine learning. Many existing models and algorithms are available, and our job is to find an appropriate one among them. There are three types of Machine Learning Models – Supervised Learning, Unsupervised Learning, and Reinforcement Learning.

Supervised Learning – deals with training the model with labeled data. The outcome is known, so the model is continuously refined to improve its accuracy. Our sample fruit data falls under this category since we already know the outcome. Because the outcome is a category (the fruit name), a classification algorithm such as Logistic regression or a Decision tree can be used to build a model with our data. Some of the common algorithms in Supervised Learning are Linear regression, Logistic regression, Polynomial regression, Random forest, Decision tree, K-nearest neighbors, and Naive Bayes.

Unsupervised Learning – If labeled data is not available and the outcome is unknown, the problem falls under the category of Unsupervised Learning. These algorithms cluster and group the data into categories. Some of the algorithms used in Unsupervised Learning are Principal component analysis, K-means clustering, Apriori Algorithm, Partial least squares, Fuzzy C-means, Hidden Markov models, and Hierarchical clustering.

Reinforcement Learning – learns and makes decisions based on trial and error. Reinforcement Learning problems are commonly modeled as a Markov decision process.

There are various algorithms available for different purposes. Some algorithms are suited for dealing with text, some for images, and so on. Choose an appropriate algorithm after analyzing the dataset and the aim of the model. For our fruit model, we are using 3 independent variables to predict a categorical outcome (the fruit name), so a classification algorithm such as Logistic regression is a suitable choice.

Train the model

The main process of building our model starts with the training phase. Here we use the part of the dataset allocated for training to make our model learn. Our model learns to identify fruits by analyzing their characteristics. Each of our 3 features has a coefficient called the weight of the feature, and the constant term is known as the bias of the model. First, we pick random values for the weights and bias and compare the resulting predictions with the actual output. The difference is then reduced by adjusting the weights and bias. Repeat the iteration until the model reaches an acceptable level of accuracy.
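The article does not name a specific way of adjusting the weights and bias, so the sketch below swaps in gradient descent, a common choice, on a small linear model. The data is hypothetical and numeric (generated from a known rule so the result can be checked), and the learning rate and number of iterations are assumptions chosen for this toy data.

```python
# Hypothetical numeric data generated from y = 2*x1 + 3*x2 + 1.
X = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0], [0.0, 1.0]]
y = [2 * a + 3 * b + 1 for a, b in X]

weights = [0.0, 0.0]  # one weight per feature (zeros instead of random values)
bias = 0.0
learning_rate = 0.02

for epoch in range(5000):
    grad_w = [0.0, 0.0]
    grad_b = 0.0
    for features, target in zip(X, y):
        # Current prediction and its difference from the actual output.
        prediction = sum(w * f for w, f in zip(weights, features)) + bias
        error = prediction - target
        # Accumulate the gradient of the mean squared error.
        for j, f in enumerate(features):
            grad_w[j] += 2 * error * f / len(X)
        grad_b += 2 * error / len(X)
    # Move the weights and bias a small step against the gradient.
    weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b

print([round(w, 2) for w in weights], round(bias, 2))  # close to [2.0, 3.0] 1.0
```

The learned weights and bias approach the values used to generate the data, which is the sense in which the model "learns" from the training set.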

You should spend a good amount of time on the training phase. The more carefully you tune and prepare the model during training, the better the results will be. This phase requires a lot of patience and experimentation. If you succeed in training your model well, you can expect good results from it.

The most important problems considered during the training of models are optimization and generalization.

- Optimization – is defined as the process of adjusting the model to get the best performance possible on training data i.e. the learning process.

- Generalization – is said to be how well the model performs on unseen data. The main goal is to get the best generalization possible.

Let’s understand two important terms before moving on to further steps in the Machine Learning model.

Bias – the simplifying assumptions made by the model to make the target function easier to learn.

Variance – the sensitivity of the model to changes in the training data. A model has high variance when it achieves a low error on the data it was trained on but a high error when the data is changed.

Overfitting and Underfitting

A model is said to be under-fitted when it cannot capture the underlying pattern of the data. It means that our algorithm or model does not fit the data well. This often happens when we have too little data to build the model or when the model is too simple. Underfitting simply means high bias and low variance. Underfitting reduces the accuracy of the model.

Underfitting can be reduced by

- Increasing the model complexity

- Increasing the number of features

- Removing the noise from the data

- Increasing the duration of training

A model is said to be over-fitted when it fits the training data too closely. This happens when the model learns the noise and inaccurate data entries in the training set, so it performs well on the training data but poorly on unseen data. Overfitting simply means high variance and low bias.

Overfitting can be reduced by

- Reducing model complexity

- Increasing the training data

- Ridge Regularization and Lasso Regularization

- Using dropout for neural networks to tackle overfitting.

Evaluate the model

After training the model with the training data, the model has to be tested. The purpose of testing is to evaluate how the model will work in real-world scenarios. We can evaluate the accuracy of the model during this phase. In our case, the model tries to identify the type of fruit using what it learned in the previous phase. The evaluation phase is very important because it lets us check whether the model achieves the goal we planned. If the model does not perform up to the mark during testing, the previous steps have to be repeated until we attain the required accuracy. As mentioned earlier, we should not use the same data that was used during the training phase. The separate test split from our dataset should be used for evaluation.

Regression metrics

Regression models deal with a continuous range of values instead of classes. The mean squared error (MSE) of the model is calculated by taking the average of the squared differences between the predicted output and the actual output. Learning curves are plotted for the training data and the validation data. If the model has high bias, the error is high on both the training and validation sets. A model that suffers from high bias needs more complexity or longer training, as described for underfitting above.
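The mean squared error described above can be computed directly from its definition. The actual and predicted values below are hypothetical.

```python
def mean_squared_error(actual, predicted):
    """Average of the squared differences between actual and predicted values."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

actual = [3.0, 5.0, 2.0, 7.0]     # hypothetical true values
predicted = [2.5, 5.0, 4.0, 8.0]  # hypothetical model outputs
print(mean_squared_error(actual, predicted))  # 1.3125
```

Squaring the differences means that the one large miss (2.0 predicted as 4.0) contributes most of the total error.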

Classification metrics

Classification models are concerned only with whether the outcome is correct or not. When performing classification predictions, each prediction for a given class falls into one of four possible outcomes: true positive, true negative, false positive, or false negative. These four outcomes are laid out in a confusion matrix. You can generate the matrix after making predictions on the test data and categorizing each prediction as one of the possible outcomes. The accuracy of the model is the percentage of correct predictions made on the test data, calculated by dividing the number of correct predictions by the total number of predictions. Other metrics like precision and recall are also used to evaluate classification models.
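As a rough sketch, the four outcomes and the metrics built on them can be counted by hand for one class treated as "positive". The labels below are hypothetical predictions, and `binary_confusion` is a helper written for this example.

```python
def binary_confusion(actual, predicted, positive):
    """Count true/false positives and negatives for one positive class."""
    tp = fp = tn = fn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif a == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn

actual = ["Apple", "Apple", "Guava", "Guava", "Apple", "Guava"]
predicted = ["Apple", "Guava", "Guava", "Apple", "Apple", "Guava"]

tp, fp, tn, fn = binary_confusion(actual, predicted, positive="Apple")
accuracy = (tp + tn) / (tp + fp + tn + fn)
precision = tp / (tp + fp)  # of the predicted Apples, how many were Apples
recall = tp / (tp + fn)     # of the real Apples, how many were found
print(tp, fp, tn, fn)  # 2 1 2 1
```

With more than two classes, the same counts are usually computed once per class, each time treating that class as the positive one.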

Hyperparameter tuning

Once the evaluation is successful, proceed to the next phase, hyperparameter tuning. This step improves the results obtained during the evaluation step. A hyperparameter of the model is a configuration that is external to the model and whose value cannot be estimated from the data provided. Hyperparameters are used in processes that help estimate model parameters, they are tuned for a given predictive modeling problem, and they can often be set using heuristics.

Some examples of model hyperparameters include the C and sigma hyperparameters for support vector machines, K in K-nearest neighbors, the learning rate for training a neural network, and the penalty in a Logistic Regression classifier. Model parameters are estimated from data automatically, whereas model hyperparameters are set manually.

Hyperparameter tuning is the process of choosing a set of optimal hyperparameters for a learning algorithm. Model hyperparameters are often loosely referred to as parameters because they are the parts of machine learning that must be set manually and tuned.

In our case, we can make our model recognize fruits better through hyperparameter tuning. There are multiple ways to improve and tune the model. You can revisit the training phase and use multiple passes over the training data (epochs) to train the model. Training for longer can improve accuracy, although too much training can lead to overfitting. You can also refine the initial values given to the model. There are many other hyperparameters we can tune to achieve the desired results.
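One simple way to tune a hyperparameter is to try several candidate values and keep the one that scores best on the validation set. The sketch below does this for K in a small hand-written K-nearest neighbors classifier. The numeric features (diameter and weight), the data points, and the candidate values of K are all assumptions made for illustration.

```python
from collections import Counter

def knn_predict(train, query, k):
    """Predict a label by majority vote among the k nearest training points."""
    by_distance = sorted(
        train,
        key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], query)),
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

def accuracy(train, validation, k):
    """Fraction of validation samples that are classified correctly."""
    correct = sum(knn_predict(train, x, k) == label for x, label in validation)
    return correct / len(validation)

# Hypothetical (features, label) pairs: [diameter_cm, weight_g].
train = [
    ([3.0, 12.0], "Strawberry"), ([2.5, 10.0], "Strawberry"),
    ([7.0, 150.0], "Apple"), ([7.5, 170.0], "Apple"),
    ([8.0, 180.0], "Apple"), ([6.5, 140.0], "Apple"),
]
validation = [([3.2, 13.0], "Strawberry"), ([7.2, 160.0], "Apple")]

# Try each candidate K and keep the one with the best validation accuracy.
scores = {k: accuracy(train, validation, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
print(scores, best_k)
```

With only two strawberries in the training data, K = 5 lets the apples outvote them, so the larger K scores worse here. This is why the value is chosen on validation data rather than guessed.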

Prediction

The final step in machine learning model building is prediction. This is the phase where our model can be considered ready for applications. Our fruit model should be able to identify the name of the fruit. The model now works without human intervention and draws its conclusions on the basis of the dataset and the training it was given. The Machine Learning model is a success if it is able to perform well in different scenarios.

This step is carried out by end-users when they use the model in their respective domain. Machine Learning models can process large amounts of data and make decisions. The fruit model we built is very simple, yet it makes the steps easy to understand.

We have covered some important steps to build a machine-learning model in this article.
