This article in our Machine Learning series covers the Naive Bayes algorithm in detail. The algorithm is simple and efficient in most cases. Before starting, it helps to have a quick overview of other machine learning algorithms.

What is Naive Bayes?

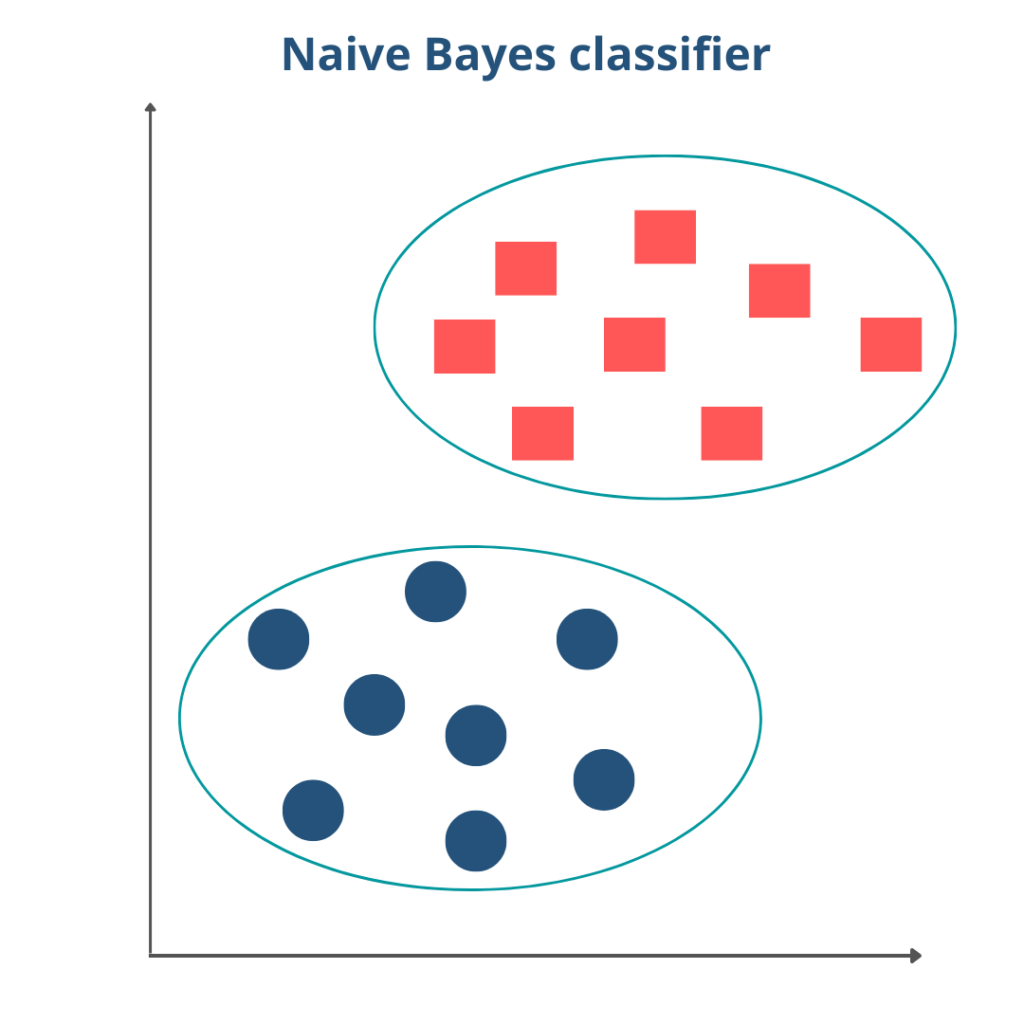

Naive Bayes classifiers are a collection of classification algorithms based on Bayes' Theorem. Naive Bayes is not a single algorithm but a family of algorithms that share a common principle: every pair of features is assumed to be independent of each other, given the class. It is one of the simplest and most effective classification algorithms, and it helps in building fast machine learning models that can make quick predictions.

The Naive Bayes model is easy to build and particularly useful for very large data sets. It is commonly used in sentiment analysis, spam filtering, recommendation systems, etc.

Working of Naive Bayes

Naive Bayes is a classification technique based on the Bayes Theorem with an assumption of independence among the predictors. In simple terms, a Naive Bayes classifier assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature.

For example, a fruit may be considered to be a mango if it is yellow, round, and about 3 inches in diameter. Even if these features depend on each other or on the existence of the other features, each of these properties is treated as contributing independently to the probability that this fruit is a mango, and that is why the algorithm is known as Naive.
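Under this independence assumption, the posterior probability of a class factorizes into a product of per-feature terms. The following is a sketch in notation introduced here for illustration (y for the class, x_1, ..., x_n for the features), not notation taken from the text above:

$$
P(y \mid x_1, \dots, x_n) \propto P(y) \prod_{i=1}^{n} P(x_i \mid y)
$$

The predicted class is the y that maximizes the right-hand side.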

Now, we will discuss the steps followed when building a Naive Bayes classifier.

Step 1: First, convert the data set into a frequency table, with the number of occurrences.

Step 2: Then, create a Likelihood table by finding the probabilities of the predictors.

Step 3: Now, use the Naive Bayesian equation to calculate the posterior probability for each class.

Step 4: The class with the highest posterior probability is the outcome of the prediction.
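The four steps above can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the weather/play data set and all variable names are hypothetical and were made up for this example, and only a single feature is used to keep the tables small.

```python
from collections import Counter

# Hypothetical toy data set of (weather, play) observations, made up for illustration.
data = [
    ("Sunny", "No"), ("Sunny", "No"), ("Overcast", "Yes"), ("Rainy", "Yes"),
    ("Rainy", "Yes"), ("Rainy", "No"), ("Overcast", "Yes"), ("Sunny", "No"),
    ("Sunny", "Yes"), ("Rainy", "Yes"), ("Sunny", "Yes"), ("Overcast", "Yes"),
    ("Overcast", "Yes"), ("Rainy", "No"),
]

# Step 1: frequency table of (feature value, class) pairs, plus class totals.
frequency = Counter(data)
class_counts = Counter(label for _, label in data)
weather_values = sorted({weather for weather, _ in data})

# Step 2: likelihood table P(weather | class) and priors P(class).
likelihood = {
    (weather, label): frequency[(weather, label)] / class_counts[label]
    for weather in weather_values
    for label in class_counts
}
prior = {label: count / len(data) for label, count in class_counts.items()}


# Step 3: posterior P(class | weather) via Bayes' theorem, normalized over classes.
def posterior(weather):
    scores = {
        label: likelihood[(weather, label)] * prior[label]
        for label in class_counts
    }
    total = sum(scores.values())
    return {label: score / total for label, score in scores.items()}


# Step 4: the class with the highest posterior is the prediction.
sunny_posterior = posterior("Sunny")
prediction = max(sunny_posterior, key=sunny_posterior.get)
print(sunny_posterior, prediction)
```

With several features, step 3 would multiply one likelihood term per feature, which is where the independence assumption comes in.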

Bayes Theorem

We already know that Bayes’ Theorem is the foundation of the Naive Bayes algorithm. Bayes’ Theorem is a simple mathematical formula used for calculating conditional probabilities. A conditional probability is the probability of an event occurring given that another event has already occurred. Bayes’ Theorem is stated mathematically as the following equation:

P(A|B) = [ P(B|A) * P(A) ] / P(B)

P(A|B) is Posterior probability – Probability of hypothesis A given the observed event B.

P(B|A) is Likelihood probability – Probability of the evidence B given that hypothesis A is true.

P(A) is Prior Probability – Probability of hypothesis before observing the evidence.

P(B) is Marginal Probability – Probability of Evidence.

Bayes’ Theorem calculates the conditional probability of an event based on prior knowledge of conditions that might be related to the event.

Example of Naive Bayes

Let’s understand Bayes’ Theorem, on which Naive Bayes is built, with an example. Suppose we toss two fair coins, so the sample space is {HH, HT, TH, TT} and each outcome has probability 1/4. From this sample space we can read off, for instance, that the probability of getting two tails is 1/4 and the probability of getting at least one head is 3/4. Now, in this sample space, let A be the event that the second coin is a tail, and B be the event that the first coin is a head. We condition on the first coin because we want to know what the second coin is going to be.

Now, we will find the Probability of A given B

P(A|B) = [ P(B|A) * P(A) ] / P(B)

= [ P(first coin is a head given the second coin is a tail) * P(second coin is a tail) ] / P(first coin is a head)

= [ (1/2) * (1/2) ] / (1/2)

P(A|B) = 1/2 = 0.5
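The calculation above can be checked by enumerating the sample space directly. The sketch below assumes two fair coins; the helper names (`prob`, `event_a`, `event_b`) are hypothetical and introduced only for this illustration.

```python
from fractions import Fraction
from itertools import product

# All equally likely outcomes of tossing two fair coins: HH, HT, TH, TT.
sample_space = list(product("HT", repeat=2))


def prob(event):
    """Probability of an event as (favourable outcomes) / (all outcomes)."""
    favourable = sum(1 for outcome in sample_space if event(outcome))
    return Fraction(favourable, len(sample_space))


def event_a(outcome):
    """A: the second coin is a tail."""
    return outcome[1] == "T"


def event_b(outcome):
    """B: the first coin is a head."""
    return outcome[0] == "H"


p_a = prob(event_a)
p_b = prob(event_b)
# P(B|A) = P(A and B) / P(A)
p_b_given_a = prob(lambda o: event_a(o) and event_b(o)) / p_a

# Bayes' theorem: P(A|B) = P(B|A) * P(A) / P(B)
p_a_given_b = p_b_given_a * p_a / p_b
print(p_a_given_b)  # 1/2
```

Using `Fraction` keeps the arithmetic exact, so the result matches the hand calculation without rounding.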

Types of Naive Bayes

There are three common types of Naive Bayes: Gaussian Naive Bayes, Multinomial Naive Bayes, and Bernoulli Naive Bayes.

Gaussian Naive Bayes

In Gaussian Naive Bayes, the predictors take continuous rather than discrete values, and we assume that these values are sampled from a Gaussian distribution.
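As a rough sketch of what this assumption means in practice, the likelihood of a continuous value under each class can be computed from a per-class mean and variance. The measurements, class names, and function name below are hypothetical and chosen only for illustration.

```python
import math
from statistics import mean, pvariance


def gaussian_pdf(x, mu, var):
    """Density of a normal distribution with mean mu and variance var at x."""
    return math.exp(-((x - mu) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


# Hypothetical measurements of one continuous feature for two classes.
values_by_class = {
    "A": [1.4, 1.5, 1.3, 1.6],
    "B": [4.5, 4.7, 4.4, 4.9],
}

# Estimate a mean and variance per class from the training values.
params = {
    label: (mean(values), pvariance(values))
    for label, values in values_by_class.items()
}

# Likelihood P(x | class) of a new observation under each class.
x_new = 1.7
likelihoods = {
    label: gaussian_pdf(x_new, mu, var) for label, (mu, var) in params.items()
}
print(likelihoods)
```

The new value 1.7 lies much closer to the class "A" measurements, so its likelihood under "A" is far larger than under "B".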

Multinomial Naive Bayes

In Multinomial Naive Bayes, the features or predictors used by the classifier are the frequencies of the words present in the document. This type of Naive Bayes is mostly used for document classification problems, i.e. deciding whether a document belongs to the category of sports, politics, technology, etc.
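A minimal sketch of document classification with word counts, using scikit-learn's `CountVectorizer` and `MultinomialNB`. The four training documents and their labels are hypothetical and far too few for a real model; they only show the shape of the workflow.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Hypothetical mini corpus, invented for illustration.
train_docs = [
    "the team won the football match",
    "the striker scored a goal",
    "new phone released with faster processor",
    "software update improves the laptop",
]
train_labels = ["sports", "sports", "technology", "technology"]

# Turn each document into a vector of word counts.
vectorizer = CountVectorizer()
X_train = vectorizer.fit_transform(train_docs)

# Fit the multinomial model on the word-count features.
model = MultinomialNB()
model.fit(X_train, train_labels)

# Classify a new document using the same vocabulary.
new_doc = ["the striker won the match"]
predicted = model.predict(vectorizer.transform(new_doc))
print(predicted[0])
```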

Bernoulli Naive Bayes

Bernoulli Naive Bayes is similar to Multinomial Naive Bayes, but the predictors are boolean variables. The parameters that we use to predict the class variable take only the values yes or no, for example, whether or not a word occurs in the text.
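The same kind of text workflow can be sketched with boolean features by using `CountVectorizer(binary=True)` together with scikit-learn's `BernoulliNB`. The messages and labels below are hypothetical and only meant to illustrate word presence/absence features.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import BernoulliNB

# Hypothetical messages, invented for illustration.
train_docs = [
    "win money now",
    "free prize win",
    "meeting at noon",
    "lunch at noon tomorrow",
]
train_labels = ["spam", "spam", "ham", "ham"]

# binary=True records only whether a word occurs, not how often.
vectorizer = CountVectorizer(binary=True)
X_train = vectorizer.fit_transform(train_docs)

model = BernoulliNB()
model.fit(X_train, train_labels)

new_doc = ["win free money"]
predicted = model.predict(vectorizer.transform(new_doc))
print(predicted[0])
```

Unlike the multinomial variant, the Bernoulli model also takes into account the words that are absent from a document.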

Implementation of Naive Bayes in Python

Let’s start the implementation of Naive Bayes by importing the iris dataset loader from the sklearn library. Next, we will load the iris dataset into our code.

# Importing the iris dataset from sklearn library

from sklearn.datasets import load_iris

# Loading the iris dataset

dataset = load_iris()

After loading the data, assign the independent variables and the outcome variable.

# Store the independent variables (X) and outcome (y)

X = dataset.data

y = dataset.target

To determine whether our model performs well on data other than the training data, we split our data into two parts: training data, which we use to train the model, and testing data, which we use to test its performance. The train_test_split method does exactly that: we give it our data and test_size, i.e. the fraction of the data to be used as test data.

# Importing the train_test_split method

from sklearn.model_selection import train_test_split

# Splitting the data into training and testing sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=1)

Now that we have our training and testing data, let’s create our GaussianNB() object and train it on the training data. To train the model, we use the fit() method.

# Training the model

from sklearn.naive_bayes import GaussianNB

model = GaussianNB()

model.fit(X_train, y_train)

# Making predictions on the testing data

y_pred = model.predict(X_test)

Now let’s check how accurate the model is by finding the accuracy score on the testing dataset. Accuracy is the fraction of predicted response values (y_pred) that match the actual response values (y_test). For this model, it came out to be 0.95, meaning that about 95% of predictions were correct.

# Importing accuracy_score method

from sklearn.metrics import accuracy_score

# Calculating the accuracy score

print(accuracy_score(y_test, y_pred))

So, we have successfully built our Naive Bayes model with the sklearn library in Python. You can experiment with other datasets to gain a good understanding of the algorithm. Next, we will discuss the pros and cons of the Naive Bayes classifier.
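For convenience, the snippets from this section can be combined into one script. The sketch below uses the same dataset, split, and model as above; the manual accuracy check and the variable names `manual_accuracy` and `library_accuracy` are additions made here to show what accuracy_score computes.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB

# Load the features (X) and the outcome (y) in one call.
X, y = load_iris(return_X_y=True)

# Hold out 40% of the data for testing, with a fixed seed for repeatability.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.4, random_state=1
)

# Train the Gaussian Naive Bayes model and predict on the test set.
model = GaussianNB().fit(X_train, y_train)
y_pred = model.predict(X_test)

# Accuracy is the fraction of test predictions that match the true labels.
manual_accuracy = (y_pred == y_test).mean()
library_accuracy = accuracy_score(y_test, y_pred)
print(manual_accuracy, library_accuracy)
```

Both values should agree, since accuracy_score computes the same fraction of matching labels.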

Advantages of Naive Bayes

- Naive Bayes is one of the fastest and easiest ML algorithms for predicting the class of a data point.

- It can be used for binary as well as multi-class classification.

- It often performs well in multi-class prediction compared to many other algorithms.

Disadvantages of Naive Bayes

- If a categorical variable has a category in the test data that was not observed in the training data set, the model assigns it a probability of 0 (zero) and is unable to make a prediction. This is often known as the “Zero Frequency” problem.

- Naive Bayes is also known to be a poor probability estimator, so its probability outputs should not be taken too seriously.

- Naive Bayes assumes that all features are independent or unrelated, so it cannot learn the relationship between features.
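The zero-frequency problem from the first point is commonly addressed with additive (Laplace) smoothing, which adds a small constant to every count so that no likelihood is exactly zero. The sketch below is a hypothetical illustration; the function name and the counts are made up. In scikit-learn, the `alpha` parameter of `MultinomialNB` and `BernoulliNB` controls this kind of smoothing.

```python
def smoothed_likelihood(count, class_total, n_categories, alpha=1.0):
    """Additive smoothing: (count + alpha) / (class_total + alpha * n_categories)."""
    return (count + alpha) / (class_total + alpha * n_categories)


# Hypothetical case: a category never seen with this class (0 of 5 samples),
# for a feature with 3 possible categories.
unsmoothed = 0 / 5
smoothed = smoothed_likelihood(count=0, class_total=5, n_categories=3)
print(unsmoothed, smoothed)  # 0.0 0.125
```

With smoothing, an unseen category lowers the posterior for a class without forcing it to zero.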

Conclusion

In this article, we took a detailed look at a machine learning algorithm, Naive Bayes. We discussed how it works, a worked example, its implementation in Python, and finally its pros and cons.
