In this article, we are going to build a cool project: MNIST handwritten digit classification using deep learning. Even if you have little knowledge of deep learning, this project will help you understand the concepts in a simple way. With that note, let's start building the project.

Handwritten digit classification

Handwritten digit classification is the ability of a model to recognize digits written by humans. Handwritten digits vary in size and orientation because writing style differs from person to person, which makes them hard for machines to recognize. A handwritten digit classifier addresses this problem: it takes an image of a digit and recognizes the digit present in the image.

MNIST Handwritten Digit dataset

The MNIST (Modified National Institute of Standards and Technology) handwritten digit dataset is one of the most beginner-friendly datasets for practicing deep learning. It contains a total of 70,000 images of handwritten digits, divided into 60,000 examples in the training set and 10,000 examples in the testing set. The images are labeled with 10 classes, one for each digit from 0 to 9. The dataset is widely used for learning about and experimenting with neural networks, including convolutional neural networks.

Prerequisites for the project

For this project, you need Python 3 installed on your system. Then install the required libraries by running the following commands one by one:

```
pip install numpy
pip install tensorflow
pip install keras
pip install pillow
pip install matplotlib
pip install seaborn
pip install opencv-python
```

Alternatively, you can run the project in a Jupyter Notebook with TensorFlow installed. If neither setup works on your system, you can use Google Colab (https://colab.research.google.com/) to develop the project.

Steps to build the model

The steps in building the deep learning model for the MNIST handwritten digit classification are as follows.

- Importing libraries

- Loading the data

- Image Processing

- Building the neural network

- Training the model

- Evaluating the results

Importing the Dependencies

In this step, we import the libraries required to build our model: NumPy, Matplotlib, seaborn, OpenCV (cv2), Pillow, TensorFlow, and Keras.

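```python
import numpy as np
import matplotlib.pyplot as plt
import seaborn as sns
import cv2
# cv2_imshow displays OpenCV images in Google Colab (this import fails outside Colab)
from google.colab.patches import cv2_imshow
from PIL import Image
import tensorflow as tf

# Fix the random seed so weight initialization is reproducible
tf.random.set_seed(3)

from tensorflow import keras
from keras.datasets import mnist

# Function that builds a confusion matrix from true and predicted labels
confusion_matrix = tf.math.confusion_matrix
```

Loading the dataset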

All the images in the dataset are pre-aligned: each image contains a single, centered handwritten digit. All images have the same size of 28×28 pixels and are grayscale, so we can load them directly. The MNIST dataset ships with Keras, so we can load it from keras.datasets:

```python
# Download (on first use) and load the train/test splits as NumPy arrays
(X_train, Y_train), (X_test, Y_test) = mnist.load_data()
```

Output:

```
Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz
11493376/11490434 [==============================] - 0s 0us/step
11501568/11490434 [==============================] - 0s 0us/step
```

After loading the data, we explore it by checking the shapes of the arrays, which tell us the number of training and testing examples and the size of each image.

```python
# Shape of the NumPy arrays
print(X_train.shape, Y_train.shape, X_test.shape, Y_test.shape)
```

Output:

```
(60000, 28, 28) (60000,) (10000, 28, 28) (10000,)
```

From the output, we can infer that there are 60,000 images in the training data and 10,000 images in the testing data, and that each image is 28 × 28 pixels.

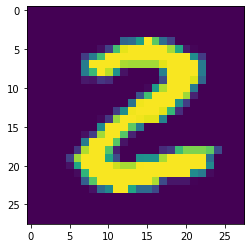

# Displaying the image

plt.imshow(X_train[25])plt.show()Output

```python
# Print the corresponding label
print(Y_train[25])
```

Output:

```
2
```

```python
print(Y_train.shape, Y_test.shape)
```

Output:

```
(60000,) (10000,)
```

```python
# Unique values in Y_train
print(np.unique(Y_train))

# Unique values in Y_test
print(np.unique(Y_test))
```

Output:

```
[0 1 2 3 4 5 6 7 8 9]
[0 1 2 3 4 5 6 7 8 9]
```

Image Processing

In this step, we scale the data so that all pixel values fall within the same range. The raw pixel values are integers from 0 to 255, so dividing by 255 maps them to the range 0 to 1.
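To see concretely what this division does, here is a minimal NumPy sketch on a made-up 2×2 array of 8-bit pixels (not real MNIST data). It shows that dividing a `uint8` array by 255 produces floats between 0 and 1:

```python
import numpy as np

# A tiny stand-in for an MNIST image: raw pixels are uint8 values in [0, 255]
raw = np.array([[0, 64], [128, 255]], dtype=np.uint8)

# True division converts to float64 and maps [0, 255] onto [0, 1]
scaled = raw / 255

print(scaled.dtype)                 # float64
print(scaled.min(), scaled.max())   # 0.0 1.0
```

Keeping inputs in a small, common range like this generally makes gradient-based training better behaved than feeding raw values up to 255.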

```python
# Scale pixel values from [0, 255] to [0, 1]
X_train = X_train / 255
X_test = X_test / 255
```

Building the Neural Network

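We will create a simple fully connected (dense) neural network using Keras. The Flatten layer turns each 28×28 image into a vector of 784 values, two hidden Dense layers with 50 ReLU units each learn features from those values, and the output Dense layer has 10 units, one per digit class. The model is created as follows: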

```python
# Set up the layers of the neural network
model = keras.Sequential([
    # Flatten each 28x28 image into a 784-element vector
    keras.layers.Flatten(input_shape=(28, 28)),
    # Two hidden layers with 50 ReLU units each
    keras.layers.Dense(50, activation='relu'),
    keras.layers.Dense(50, activation='relu'),
    # Output layer: softmax gives one probability per digit, summing to 1
    keras.layers.Dense(10, activation='softmax')
])
```

Once the model has been created, we compile it and then fit it to the training data. During fitting, the model passes over the training set once per epoch, for as many epochs as we specify, adjusting its weights each time to better map images to their labels.
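Before compiling, it may help to see what these stacked layers compute. The NumPy sketch below runs one forward pass through the same layer shapes (784 → 50 → 50 → 10) using random, untrained weights; these are illustrative only and are not Keras' own initializer. It uses softmax on the output, the usual choice for turning 10 scores into probabilities that sum to 1, and also counts the weights and biases.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    # Subtract the row maximum for numerical stability before exponentiating
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

# Random weights with the same shapes as the Keras layers (illustration only)
W1, b1 = rng.normal(0, 0.05, (784, 50)), np.zeros(50)
W2, b2 = rng.normal(0, 0.05, (50, 50)), np.zeros(50)
W3, b3 = rng.normal(0, 0.05, (50, 10)), np.zeros(10)

# A batch of 4 fake 28x28 "images" with values in [0, 1]
images = rng.random((4, 28, 28))

x = images.reshape(len(images), -1)   # Flatten: (4, 28, 28) -> (4, 784)
h1 = relu(x @ W1 + b1)                # Dense(50, relu): (4, 50)
h2 = relu(h1 @ W2 + b2)               # Dense(50, relu): (4, 50)
probs = softmax(h2 @ W3 + b3)         # Dense(10, softmax): (4, 10)

n_params = sum(w.size for w in (W1, b1, W2, b2, W3, b3))
print(probs.shape)   # (4, 10)
print(n_params)      # 42310
```

The parameter count works out to (784×50 + 50) + (50×50 + 50) + (50×10 + 10) = 42,310, which is the number of trainable parameters this architecture has.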

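We use the Adam optimizer and the sparse_categorical_crossentropy loss, which expects integer labels (0 to 9) like those in Y_train rather than one-hot vectors.

```python
# Compile the neural network
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```

Training the model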

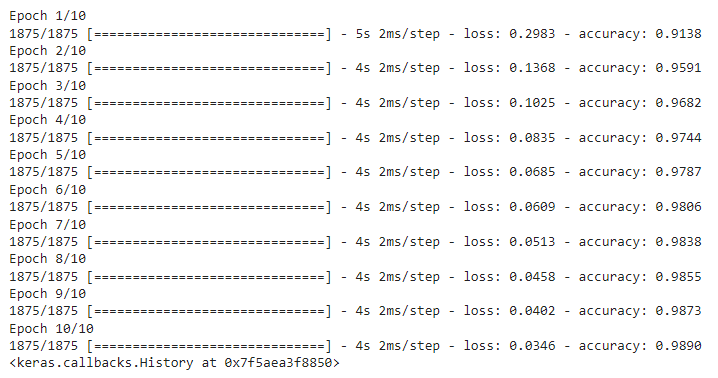

In our example, we train for 10 epochs. During training, the model makes predictions and compares them with the true labels. Each prediction that is wrong, or right but not confident, adds a penalty, and the average penalty over an epoch is reported as the loss. Ideally, the loss decreases and the accuracy increases from one epoch to the next.
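The "penalty" here is sparse categorical cross-entropy: the average of -log(probability the model assigned to the true class). A minimal NumPy sketch of that formula, using made-up predictions over 3 classes instead of 10 (a simplified illustration, not Keras' exact implementation):

```python
import numpy as np

def sparse_categorical_crossentropy(y_true, probs):
    """Mean of -log(probability assigned to the true class)."""
    eps = 1e-7  # avoid log(0)
    picked = probs[np.arange(len(y_true)), y_true]
    return float(np.mean(-np.log(np.clip(picked, eps, 1.0))))

# Made-up predictions for two images (true labels: 0 and 2)
y_true = np.array([0, 2])
confident_right = np.array([[0.9, 0.05, 0.05], [0.1, 0.1, 0.8]])
confident_wrong = np.array([[0.05, 0.9, 0.05], [0.8, 0.1, 0.1]])

print(round(sparse_categorical_crossentropy(y_true, confident_right), 4))
print(round(sparse_categorical_crossentropy(y_true, confident_wrong), 4))
```

Confident correct predictions give a small loss (about 0.16), while confident wrong ones are penalized heavily (about 2.65), which is why the loss falls as the model improves.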

```python
# Train the neural network
model.fit(X_train, Y_train, epochs=10)
```

We can see that the training data accuracy for our model is 98.9%.
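How many weight updates does that training run involve? Since model.fit() is called without a batch_size, Keras falls back to its default of 32 images per batch, so each epoch is split into ceil(60000 / 32) steps. A quick sketch of the arithmetic:

```python
import math

n_train = 60_000   # training images in MNIST
batch_size = 32    # Keras' default when model.fit() gets no batch_size
epochs = 10

steps_per_epoch = math.ceil(n_train / batch_size)
total_updates = steps_per_epoch * epochs
print(steps_per_epoch, total_updates)   # 1875 18750
```

These steps are what the per-epoch progress bar counts during training.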

Evaluating the model

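```python
# Evaluate on the 10,000 test images the model has not seen
loss, accuracy = model.evaluate(X_test, Y_test)
print(accuracy)
```

Output:

```
0.9710000157356262
```

The test accuracy is about 97.1%, slightly lower than the 98.9% training accuracy, which is typical because the model never saw the test images during training.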
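The accuracy reported by model.evaluate() is the fraction of test images whose highest-probability class equals the true label. The same computation in NumPy, on made-up probabilities for 4 images over 3 classes (not actual model outputs):

```python
import numpy as np

# Made-up softmax outputs for 4 images over 3 classes (illustration only)
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
])
y_true = np.array([0, 1, 2, 1])

y_pred = np.argmax(probs, axis=1)            # most likely class per row
accuracy = float(np.mean(y_pred == y_true))  # share of correct predictions
print(y_pred, accuracy)                      # [0 1 2 0] 0.75
```

The last image is misclassified (class 0 instead of 1), so 3 of 4 predictions are correct.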

Now, let's check the model's prediction on a single image from the testing data.

# First data point in X_test

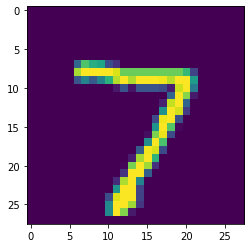

plt.imshow(X_test[0])

plt.show()Output

We can see that the first image in the testing set is a 7. Its label confirms this:

```python
print(Y_test[0])
```

Output:

```
7
```

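model.predict() returns, for every test image, a probability for each of the 10 classes. We convert these prediction probabilities to a class label with np.argmax, which picks the class with the highest probability, and then print the label for the first image in the testing data.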

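```python
# Predict class probabilities for all test images
Y_pred = model.predict(X_test)

# Index of the highest probability = predicted digit
label_for_first_test_image = np.argmax(Y_pred[0])
print(label_for_first_test_image)
```

Output:

```
7
```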

Applying the same conversion to every row of Y_pred gives the predicted labels for the whole test set:

```python
# Convert each probability vector to its most likely class
Y_pred_labels = np.argmax(Y_pred, axis=1).tolist()
print(Y_pred_labels)
```

Output:

```
[7, 2, 1, 0, 4, 1, 4, 9, 6, 9, 0, 6, 9, 0, 1, 5, 9, 7, 3, 4, 9, 6, 6, 5, 4, 0, 7, 4, 0, 1, 3, 1, 3, 4, 7, 2, 7, 1, 2, 1, 1, 7, 4, 2, 3, 5, 1, 2, 4, 4, 6, 3, 5, 5, 6, 0, 4, 1, 9, 5, 7, 8, 9, 3, 7, 4, 6, 4, 3, 0, 7, 0, 2, 9, 1, 7, 3, 2, 9,……..]
```

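Y_test holds the true labels and Y_pred_labels holds the predicted labels. A confusion matrix compares the two: the entry in row i and column j counts the test images whose true label is i and whose predicted label is j, so correct predictions lie on the diagonal.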
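tf.math.confusion_matrix does this counting for us. To make the idea concrete, here is a hand-rolled NumPy version (`confusion_matrix_np` is a hypothetical helper written for this sketch) applied to made-up labels for 3 classes:

```python
import numpy as np

def confusion_matrix_np(y_true, y_pred, num_classes):
    """Entry [i, j] counts samples with true label i predicted as j."""
    cm = np.zeros((num_classes, num_classes), dtype=int)
    # np.add.at accumulates correctly even when (i, j) pairs repeat
    np.add.at(cm, (y_true, y_pred), 1)
    return cm

y_true = np.array([0, 1, 2, 2, 1, 0])
y_pred = np.array([0, 2, 2, 2, 1, 0])

cm = confusion_matrix_np(y_true, y_pred, num_classes=3)
print(cm)
# Correct predictions lie on the diagonal
print(np.trace(cm) / cm.sum())
```

Here one "1" was mistaken for a "2", which shows up as the single off-diagonal count in row 1, column 2.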

```python
# Confusion matrix
conf_mat = confusion_matrix(Y_test, Y_pred_labels)
print(conf_mat)
```

Let's visualize the confusion matrix as a heatmap for better understanding:

```python
plt.figure(figsize=(15, 7))
# Annotate each cell with its integer count
sns.heatmap(conf_mat, annot=True, fmt='d', cmap='Blues')
plt.ylabel('True Labels')
plt.xlabel('Predicted Labels')
plt.show()
```

Building a Predictive System

Let's build a prediction system that takes an image path from the user and classifies the handwritten digit in that image.

```python
input_image_path = input('Path of the image to be predicted: ')

# OpenCV loads images in BGR channel order
input_image = cv2.imread(input_image_path)
cv2_imshow(input_image)  # Colab-only display helper

# Convert to grayscale and resize to the 28x28 MNIST format
grayscale = cv2.cvtColor(input_image, cv2.COLOR_BGR2GRAY)
input_image_resize = cv2.resize(grayscale, (28, 28))

# Scale to [0, 1], as was done for the training data
input_image_resize = input_image_resize / 255

# Add a batch dimension: (28, 28) -> (1, 28, 28)
image_reshaped = np.reshape(input_image_resize, [1, 28, 28])

input_prediction = model.predict(image_reshaped)
input_pred_label = np.argmax(input_prediction)

print('The handwritten digit is recognized as', input_pred_label)
```

Output:

```
The handwritten digit is recognized as 3
```
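Keras models predict on batches, so a single (28, 28) image has to become (1, 28, 28) before it is passed to model.predict(); that is what the np.reshape(..., [1, 28, 28]) call does. The sketch below does the same step with np.newaxis inside a hypothetical helper, `to_model_input`. Its optional `invert` flag is an assumption, not part of the original code: MNIST digits are light strokes on a dark background, so a photo of dark ink on white paper may need inverting to resemble the training data.

```python
import numpy as np

def to_model_input(gray_28x28, invert=False):
    """Hypothetical helper: scale a 28x28 uint8 grayscale image and add a batch axis."""
    x = gray_28x28.astype(np.float32) / 255.0
    if invert:
        # Assumption: flip dark-on-light images to MNIST's light-on-dark style
        x = 1.0 - x
    return x[np.newaxis, ...]   # (28, 28) -> (1, 28, 28)

# A blank white 28x28 "page" as a stand-in for a real photo
page = np.full((28, 28), 255, dtype=np.uint8)

batch = to_model_input(page, invert=True)
print(batch.shape, batch.max())   # (1, 28, 28) 0.0
```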

Conclusion

In this article, we used the popular MNIST dataset to classify handwritten digits from 0 to 9. The pixel values were scaled to the range 0 to 1. Using Keras, a simple fully connected neural network was created and trained on the training set, reaching about 97% accuracy on the test set. Finally, predictions were made using the trained model, including on a user-supplied image.

And yay! We have successfully completed our deep learning project on MNIST handwritten digit classification. We hope you enjoyed doing this project!
